Introduction

In November 2022, ChatGPT burst into everybody’s lives.

Large Language Models (LLMs), like OpenAI’s GPT-3 and GPT-4, are a type of artificial intelligence (AI) technology. AI is a broad term encompassing a range of technologies designed to mimic human intelligence in some way, including understanding and generating natural language, recognizing patterns, making decisions, and learning from experience.

LLMs are not a new technology. What OpenAI did with ChatGPT was open them up to general use, so that anyone can work with them without knowing the complex mathematics and algorithms behind them.

A prompt refers to the input text that you provide to the model. It’s essentially the instruction or question you give the AI, which it uses to generate a response.

LLMs like OpenAI’s ChatGPT and Google Bard are trained on diverse internet texts and thus have a wide array of capabilities, though their efficacy can vary based on the specifics of a given task.

Here are some areas where they tend to excel:

Text Generation and Completion.

Question Answering.

Translation.

Summarization.

Coding.

Tutoring.

And much more.

If we apply those capabilities to CTFs, it’s a game changer.

What are CTFs?

CTF stands for “Capture The Flag,” a type of competition commonly used in the field of cybersecurity, although it can also refer to other forms of problem-solving contests. These events are designed to serve as learning platforms and a way to test and improve one’s skills.

In cybersecurity, CTFs typically involve participants or teams trying to find and exploit vulnerabilities in a given system, with the objective of “capturing” a flag, which is usually a specific piece of information or a particular file. The flag is then submitted to the event organizers for points. The team or individual with the most points at the end of the event is declared the winner.

One of the most common formats is Boot2Root.

The primary goal of a Boot2Root challenge is to exploit vulnerabilities in a system to gain root access, essentially the highest level of access or control over the system.

The Role of LLMs in CTF Challenges

Navigating a Boot2Root challenge often leads to moments of being stumped. During these moments, we typically turn to our reliable ally — Google. Our search queries quickly fill up with questions like, “How can I scan this…?”, “What’s the method to crack this hash…?”, or “How can I leverage this SUID file to gain root privileges?”, and many more.

A crucial aspect of these searches is the learning process. When you find an answer, it’s not just about copying and pasting a solution to overcome the immediate challenge. It’s about absorbing the knowledge behind the solution and developing a deep understanding that you can apply to future problems.

The advent of Large Language Models (LLMs) has significantly streamlined this process. Models like ChatGPT can offer directions, provide hints, and sometimes even generate solutions at a swift pace. Yet, it’s important to approach these AI tools as learning aids rather than shortcuts. Use them to enhance your understanding of the challenge at hand, so you continue to grow as a problem-solver in the intriguing world of CTFs.

It’s important to bear in mind that LLMs, being statistical models, can give incorrect responses — thus, it’s crucial to validate their outputs.

Let's see some examples:

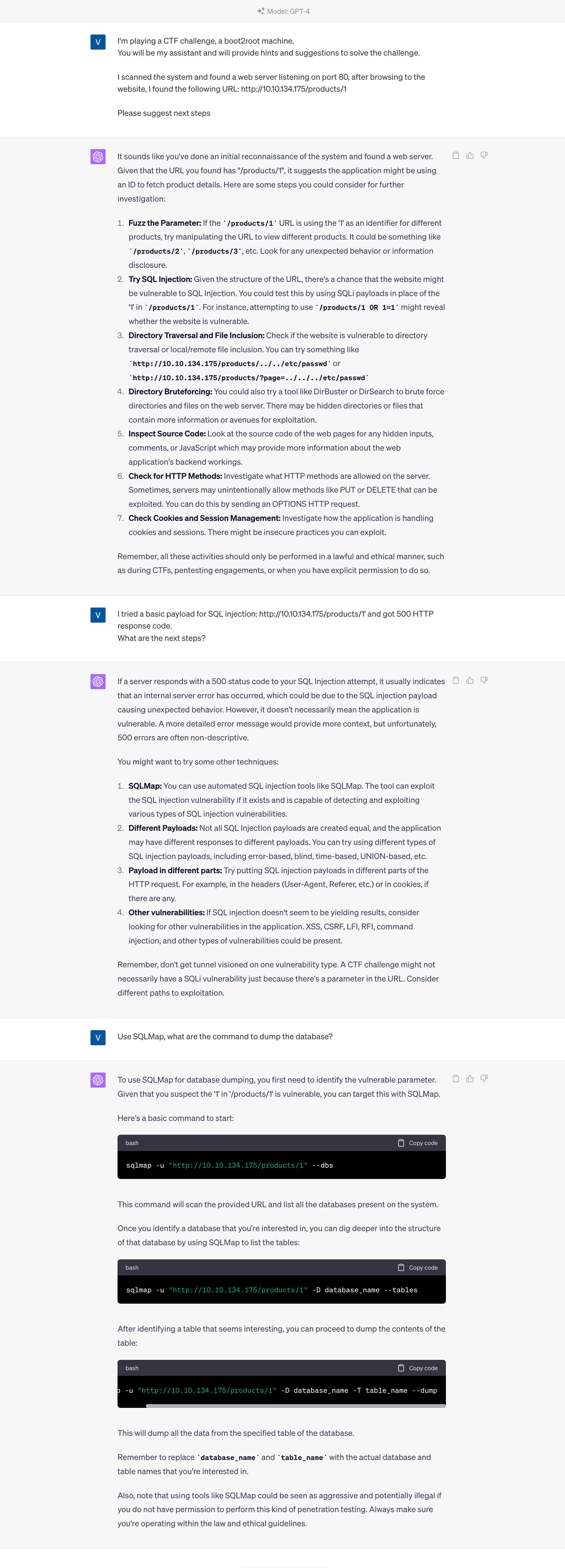

Use case 1: I have a URL. Suggest next steps:

I provided a URL to ChatGPT and requested its recommendations for potential exploitation strategies.

Indeed, ChatGPT offered several strategies for potentially exploiting the URL. In this instance, the vulnerability was SQL Injection, and ChatGPT helped demonstrate how to exploit it. However, it’s crucial not only to follow these steps but also to understand why ChatGPT recommended SQL Injection as the approach. Understanding the reasoning behind the strategy is essential in ethical hacking.
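To make the reasoning behind a SQL Injection finding more concrete, here is a minimal sketch of why pasting user input into a query is dangerous. It is not the application from the challenge: the `users` table, its rows, and the payload are hypothetical, and an in-memory SQLite database stands in for whatever backend a real target would use.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical `users` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, secret TEXT)"
    )
    conn.executemany(
        "INSERT INTO users (name, secret) VALUES (?, ?)",
        [("alice", "flag{not_a_real_flag}"), ("bob", "hunter2")],
    )
    return conn


def lookup_vulnerable(conn: sqlite3.Connection, name: str) -> list:
    # The input is pasted into the SQL text, so a quote character in `name`
    # can change the structure of the query itself.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn: sqlite3.Connection, name: str) -> list:
    # The `?` placeholder keeps the input as plain data, never as SQL.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"  # hypothetical injection string
    print("vulnerable:", lookup_vulnerable(conn, payload))
    print("safe:      ", lookup_safe(conn, payload))
```

With the payload above, the vulnerable lookup ends up running `WHERE name = 'x' OR '1'='1'` and returns every row, while the parameterized lookup returns nothing. Seeing that difference is the kind of understanding worth taking away from an LLM's suggestion.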

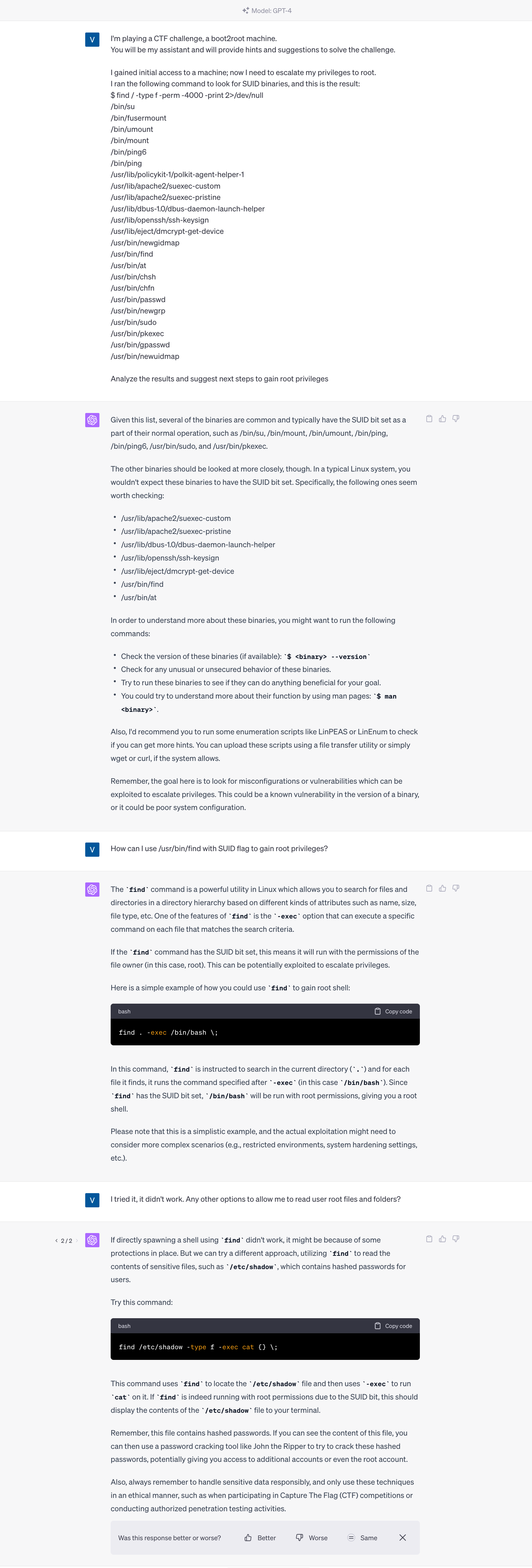

Use case 2: “find” the root.

After securing initial access to the machine, I presented the output from the find command for SUID files to ChatGPT, seeking advice on the next steps to take.

In this case, ChatGPT helped me find different ways to use the “find” binary, which had the SUID bit set, to escalate my privileges.
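For readers who want to see what "searching for SUID files" actually checks, the sketch below does it in Python. It is an illustration, not the command used in the challenge; on a real machine a one-liner such as `find / -perm -4000 -type f 2>/dev/null` is the more usual choice. The function name `find_suid_files` is my own.

```python
import os
import stat


def find_suid_files(root: str) -> list:
    """Return regular files under `root` that have the set-user-ID bit set."""
    hits = []
    # os.walk silently skips directories it cannot read, which is convenient
    # when scanning as an unprivileged user.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISREG(mode) and mode & stat.S_ISUID:
                hits.append(path)
    return sorted(hits)


if __name__ == "__main__":
    for path in find_suid_files("/usr/bin"):
        print(path)
```

The resulting list is the kind of output that can be pasted into a prompt, along with a question about which of the binaries are known to allow privilege escalation when they run with the owner's privileges.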

Use case 3: I’m clueless

In this case, I gave ChatGPT the Nmap scan output and asked it to suggest the next steps.

In this challenge, the misconfiguration was in the SMB service.
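As a rough sketch of how scan output can be turned into a focused prompt, the Python below pulls the open ports out of Nmap's normal text output and wraps them in a question. The sample scan is made up for illustration (it is not the output from this challenge), and the helper names `parse_open_ports` and `build_prompt`, as well as the prompt wording, are assumptions of mine.

```python
import re

# Matches lines such as "445/tcp open  netbios-ssn Samba smbd".
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)\s*(.*)$")

# Hypothetical scan output, used only to exercise the parser.
SAMPLE_SCAN = """\
PORT    STATE  SERVICE     VERSION
22/tcp  open   ssh         OpenSSH 7.6p1
80/tcp  closed http
139/tcp open   netbios-ssn Samba smbd
445/tcp open   netbios-ssn Samba smbd
"""


def parse_open_ports(nmap_output: str) -> list:
    """Extract open ports from Nmap's normal (human-readable) output."""
    ports = []
    for line in nmap_output.splitlines():
        match = PORT_LINE.match(line.strip())
        if match and match.group(3) == "open":
            ports.append(
                {
                    "port": int(match.group(1)),
                    "proto": match.group(2),
                    "service": match.group(4),
                    "version": match.group(5).strip(),
                }
            )
    return ports


def build_prompt(ports: list) -> str:
    """Compose a prompt that gives the model context plus a clear request."""
    lines = [
        f"- {p['port']}/{p['proto']} {p['service']} {p['version']}".rstrip()
        for p in ports
    ]
    return (
        "I am working on a Boot2Root CTF machine that I am authorized to test.\n"
        "Open ports from my Nmap scan:\n"
        + "\n".join(lines)
        + "\nSuggest the next enumeration steps and explain why each is worth trying."
    )


if __name__ == "__main__":
    print(build_prompt(parse_open_ports(SAMPLE_SCAN)))
```

Trimming the scan down to the open services and asking for an explanation, not just commands, keeps the answer focused and makes it easier to verify each suggestion before acting on it.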

Conclusion

We are on the cusp of a paradigm shift in how we consume information. Just as search engines once opened up a new way of accessing information, Large Language Models (LLMs) like ChatGPT and Bard are set to transform how we work. Their utility in Capture The Flag (CTF) challenges is clear. However, it is crucial that we do not lean on them excessively but instead use these tools to sharpen our understanding and professionalism.

As you delve deeper into CTFs using these sophisticated tools, always remember the core objective: continuous learning and enhancing your skills. When working with LLMs, your results depend heavily on the quality and context of your input. The more detailed and specific your data and examples, the more precise and useful the output tends to be.

Mastering the craft of prompt engineering is no longer an option; it’s a necessity. By refining this skill, you’ll be able to harness the full potential of LLMs and utilize them as powerful allies in your journey toward professional growth and success.

Happy Hacking!