First, a disclaimer: this is my own opinion and mine alone.

Many people ask me whether the Apple silicon CPU/GPU is good for CGI or VFX productions, and whether it is the future.

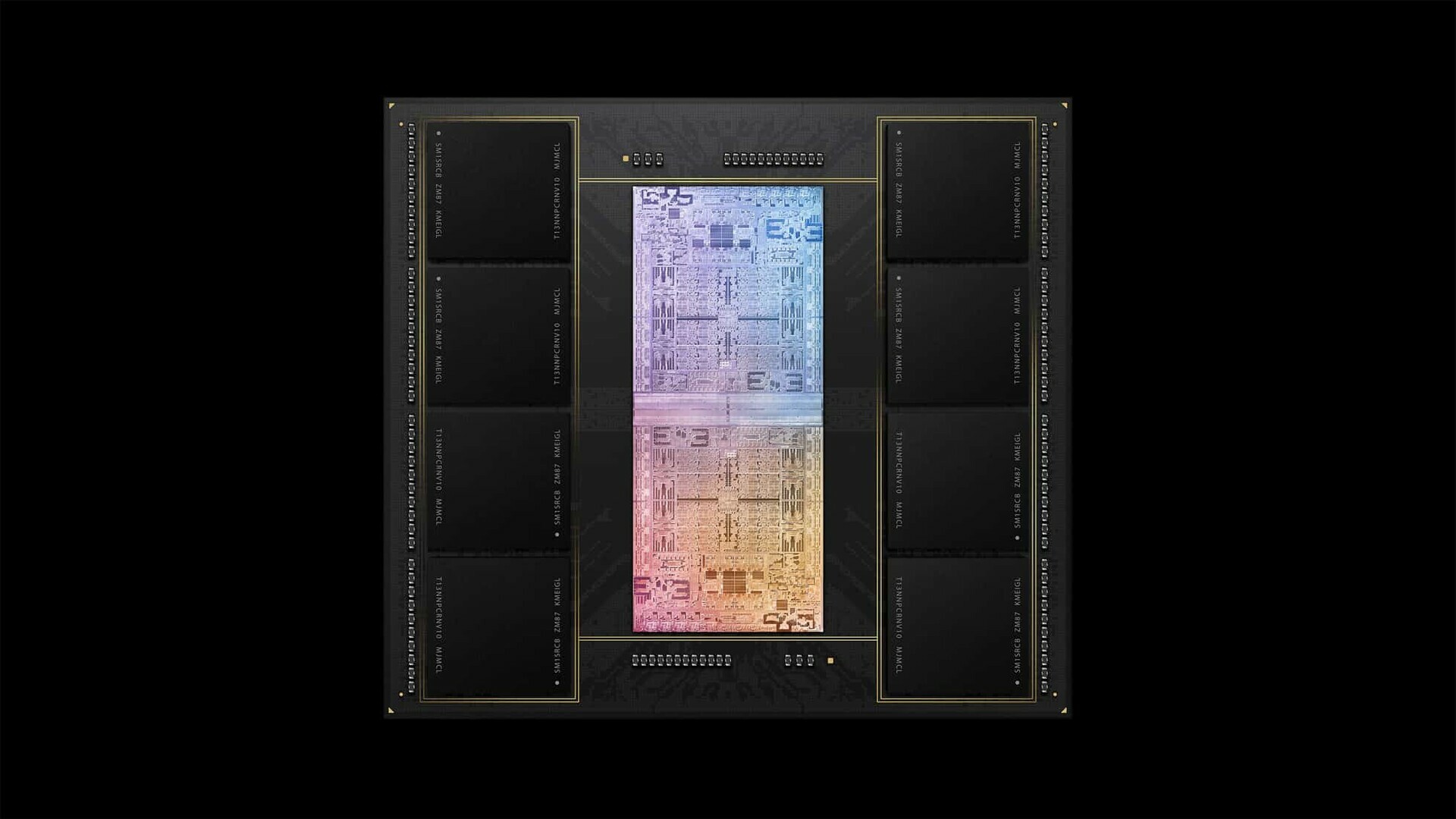

First, let's have a look at the concept of the silicon chip from Apple.

MacBooks and MacBook Pros with the M1 chip get 20+ hours of battery life, which is 3-4 times more than Intel laptops or previous MacBooks. Is the M1 so much better than the Intel or AMD chips? Hmm, maybe. Or is the battery so much better? I don't think so; it's still the same type of battery.

What really makes it stand out is the concept of the CPU and GPU design.

The M1 has its own memory right next to the CPU, so data doesn't need to move back and forth to memory on the mainboard, which already saves electric power. Next, the GPU cores, which also handle the display, are on the same chip. This means data doesn't need to be transferred across the mainboard to a graphics card over a bus that has to run at a high frequency to be fast. High frequency also needs more power and cooling, so that's the next power saving. The M1 design also has shared memory, meaning the CPU and GPU use the same memory, unlike a regular computer. That saves the extra work of shifting data from CPU memory to GPU memory, which saves power again.
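To put a rough number on the copy step that shared memory avoids, here is a minimal Python sketch. The scene size and bus bandwidth are assumptions I picked for illustration, not measurements, and the function names are made up for this example.

```python
def copy_seconds(size_gb: float, bus_gb_per_s: float) -> float:
    """Seconds needed to move size_gb across a bus at bus_gb_per_s."""
    if bus_gb_per_s <= 0:
        raise ValueError("bus bandwidth must be positive")
    return size_gb / bus_gb_per_s


def upload_seconds(size_gb: float, bus_gb_per_s: float, unified: bool) -> float:
    """Time spent getting data to the GPU before it can start working."""
    # With unified memory the GPU reads the data where it already is,
    # so this simple model charges no copy time at all.
    return 0.0 if unified else copy_seconds(size_gb, bus_gb_per_s)


SCENE_GB = 32.0       # hypothetical scene size
BUS_GB_PER_S = 16.0   # hypothetical sustained bus bandwidth

print("separate memory:", upload_seconds(SCENE_GB, BUS_GB_PER_S, unified=False), "s")
print("unified memory: ", upload_seconds(SCENE_GB, BUS_GB_PER_S, unified=True), "s")
```

The model is deliberately simple: it ignores caching, overlap of copy and compute, and driver overhead. It only shows why a copy that never happens costs neither time nor power.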

Probably the biggest energy saving comes from the different CPU cores. M1 chips have two kinds: regular CPU cores, like in Intel or AMD chips, and low-power CPU cores. These simpler and slower cores need only a fraction of the power of a regular full-featured CPU core. And that's the key. 95% of laptop use is writing, office work like Excel sheets, watching movies or surfing the internet, tasks which the low-power cores can easily do. And that's the main reason why the battery lasts so long. If you do raytracing all the time, your battery life will be in a similar range to that of an Intel laptop.
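The effect of the usage mix on battery life can be sketched with a bit of arithmetic. The battery capacity and the two power draws below are assumptions chosen only to show the shape of the effect; they are not M1 measurements.

```python
def estimated_hours(capacity_wh: float, light_fraction: float,
                    light_watts: float, heavy_watts: float) -> float:
    """Battery life for a mix of light work (low-power cores) and heavy work."""
    if not 0.0 <= light_fraction <= 1.0:
        raise ValueError("light_fraction must be between 0 and 1")
    # Average draw is the time-weighted mix of the two power levels.
    average_watts = (light_fraction * light_watts
                     + (1.0 - light_fraction) * heavy_watts)
    return capacity_wh / average_watts


CAPACITY_WH = 60.0   # hypothetical battery capacity
LIGHT_WATTS = 3.0    # hypothetical draw for office work and video
HEAVY_WATTS = 30.0   # hypothetical draw for nonstop raytracing

print("95% light use:", round(estimated_hours(CAPACITY_WH, 0.95, LIGHT_WATTS, HEAVY_WATTS), 1), "h")
print("all raytracing:", round(estimated_hours(CAPACITY_WH, 0.0, LIGHT_WATTS, HEAVY_WATTS), 1), "h")
```

With these made-up numbers, the same battery lasts several times longer under mostly light use than under constant heavy load, which is the point of the paragraph above.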


So, the concept of the M1 chips is to have multiple processing units for different purposes on one chip. CPU cores, low-power CPU cores, GPU cores and AI cores (Nvidia calls its AI units Tensor Cores) sit on the chip with a unified memory. This means the 128GB of RAM, like on the M1 Ultra chip, can be fully used by the GPU cores for graphics or heavy jobs like raytracing. That's huge! If you want 128GB of graphics card memory with Nvidia or AMD cards, you need to invest $14k+ just for graphics cards, plus the cost of a PC with a proper mainboard and cooling system. The downside of the concept is that it's not flexible: you can't upgrade the memory or the graphics card. But to be honest, how many times have you gone to the store and bought more memory these days?
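As a back-of-the-envelope check, this small Python sketch counts how many discrete cards it takes to reach a target amount of graphics memory. The per-card memory sizes and the price are placeholders I made up; plug in real values for the cards you are comparing.

```python
import math


def cards_needed(target_gb: int, card_gb: int) -> int:
    """Smallest number of cards whose combined memory reaches target_gb."""
    if card_gb <= 0:
        raise ValueError("card_gb must be positive")
    return math.ceil(target_gb / card_gb)


def total_cost(target_gb: int, card_gb: int, price_per_card: float) -> float:
    """Cost of the cards alone, without the PC, mainboard or cooling."""
    return cards_needed(target_gb, card_gb) * price_per_card


TARGET_GB = 128            # the M1 Ultra figure from the text
HYPOTHETICAL_PRICE = 2500  # placeholder price per card, not a real quote

for card_gb in (24, 48):   # hypothetical per-card memory sizes
    count = cards_needed(TARGET_GB, card_gb)
    print(card_gb, "GB cards:", count, "cards,",
          total_cost(TARGET_GB, card_gb, HYPOTHETICAL_PRICE), "total")
```

Keep in mind that memory spread across several cards is not one big pool the way unified memory is, so this count flatters the multi-card setup if anything.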

Overall the concept sounds promising, and I think it's the future for now. The concept is not new: the O2 workstation from Silicon Graphics had the same concept, and so did the Nintendo 64 video game console. It didn't turn out to be successful. At the time, the speed improvements of the Intel chips were so huge that they outran any benefits of the O2 design.

In their current state, the M1 chips hold up pretty well against Intel and AMD CPUs and beat anything in the same price class. Of course, you can outrun the M1 chips with a high-end, water-cooled desktop if you want to do rendering, but at what cost? Also, the M1 chip still can't compete with a fully beefed-up Nvidia 3090 GPU card in terms of rendering speed. The M1 is designed as an all-purpose workhorse, and an extremely fast one, and on top of that it has a big memory advantage.

Video processing, for example, is unmatched. It profits from the shared memory: there is no need to shuffle the insane amounts of data of a 4K or 8K video between memory types. At the moment, the M1 chip is a powerful alternative to Intel's CPU dominance, and competition is good for the consumer.
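To get a feeling for how much data that is, here is a small Python calculation of uncompressed frame sizes. I assume UHD resolutions, four channels (RGBA) at 16 bits each, and 24 frames per second; real pipelines use many different formats, so treat this as one example.

```python
def frame_bytes(width: int, height: int, channels: int, bytes_per_channel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * channels * bytes_per_channel


def stream_gb_per_s(width: int, height: int, channels: int,
                    bytes_per_channel: int, fps: int) -> float:
    """Uncompressed data rate in GB/s (1 GB = 10**9 bytes)."""
    return frame_bytes(width, height, channels, bytes_per_channel) * fps / 1e9


# Assumed formats: UHD resolutions, RGBA, 16 bits per channel, 24 fps.
FORMATS = {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}

for name, (w, h) in FORMATS.items():
    mb = frame_bytes(w, h, 4, 2) / 1e6
    rate = stream_gb_per_s(w, h, 4, 2, 24)
    print(f"{name}: {mb:.1f} MB per frame, {rate:.2f} GB/s at 24 fps")
```

Every copy of that stream between CPU memory and GPU memory costs bandwidth and power, which is where a shared memory pool helps.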

As a workstation computer, the Apple silicon chip is a worthy alternative, even more so once the software gets converted to native M1 applications and takes full advantage of the system. Rumour has it that Intel and AMD are working on similar architectures. The future looks bright!

The M1, M1 Pro and M1 Max share the same architecture, just with different numbers of cores and amounts of memory. The Ultra chip is basically two M1 Max chips glued together, and the data exchange between them is so fast that it works as one.

Apple silicon is also attractive as a server or render-farm system. The low power usage and small form factor are efficient: you don't need big motherboards with fast bus lanes, which bump up the cost of cooling and power, a huge factor in this area.

The question remains: what is the future? Will the standard PC chips stay dominant, or will ARM-architecture chip designs like the Apple M1 take over? From a technical standpoint, the ARM architecture is the most efficient way of running a computer system. Having the right CPU/GPU unit for the right job is power efficient and cheaper. The downside is that it's very complicated to write complex software for this kind of system. If AMD came out with a 512-core Threadripper, it would not be very power efficient, but it would be extremely efficient for software development, and that would triumph over power efficiency. Nevertheless, for mobile or small devices the ARM architecture will be the standard, simply because the power saving is so impactful.

It will probably rule desktop machines too, because having a large number of full CPU cores in one unit has its physical limits. Extreme core counts need a large mainboard, a lot of electrical power and a lot of cooling. We are also close to the physical limit of how far we can shrink a CPU, at around 5 nm (nanometers). Going much smaller gets extremely hard, because the electrons start to interfere with each other and you no longer get the correct information you need.

On the other side, new programming languages and AI are improving constantly, which helps with software development for abstract parallel processing. I think ARM is the future, and it will be interesting to see what kind of ARM systems the competition (Intel, Nvidia, AMD) brings us.